|

In a nutshell, web scraping is the process of extracting data from the web programmatically, often at scale. This involves specialized libraries as well as some understanding of how websites are structured, so that you can separate the useful data from the markup and other clutter found on a web page. Web scraping is very useful, especially if you are looking at updating your dataset based on a particular online source that's publicly available but doesn't have an API. APIs are very important and popular these days, but they are beyond the scope of this article. Feel free to check out another article I've written on this topic. In any case, web scraping is very popular too, as it greatly facilitates data acquisition, be it for building a dataset from scratch or supplementing existing datasets.

Despite its immense usefulness, web scraping is not without its limitations. These generally fall under the umbrella of ethics since they aren't set in stone, nor are they linked to legal issues. Nevertheless, they are quite serious and could potentially jeopardize a data science project if left unaddressed. So, even though these ethical rules/guidelines vary from case to case, here is a summary of the most important ones that are relevant for a typical data science project:

It's also important to keep certain other things in mind when it comes to web scraping. Namely, since web scraping scripts tend to hang (usually due to the server they are requesting data from), it's good to save the scraped data periodically. Also, make sure you scrape all the fields (variables) you need, even if you are not sure about them. It's better to err on the side of collecting too much, since you can always remove unnecessary data afterward. If you need additional fields though, or your script hangs before you save the data, you'll have to redo the web scraping from the beginning.

If you want to learn more about ethics and other non-technical aspects of data science work, I invite you to check out a book I co-authored earlier this year. Namely, the Data Scientist Bedside Manner book covers a variety of such topics, including some hands-on advice to boost your career in this field, all while maintaining an ethical mindset. Cheers!
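The advice above about saving scraped data periodically can be sketched as follows. This is only an illustration under stated assumptions: the function names, the JSON Lines checkpoint file, and the `fetch` callable (which stands in for whatever scraping logic you use and returns a dictionary of fields) are all hypothetical and not tied to any particular scraping library.

```python
import json
from pathlib import Path


def load_done(path):
    """Return the set of URLs already stored in the checkpoint file (JSON Lines)."""
    p = Path(path)
    if not p.exists():
        return set()
    with p.open(encoding="utf-8") as f:
        return {json.loads(line)["url"] for line in f if line.strip()}


def flush_records(buffer, path):
    """Append the buffered records to the checkpoint file and empty the buffer."""
    with open(path, "a", encoding="utf-8") as f:
        for record in buffer:
            f.write(json.dumps(record) + "\n")
    count = len(buffer)
    buffer.clear()
    return count


def scrape_with_checkpoints(urls, fetch, path, every=10):
    """Fetch every URL not saved yet, writing to disk after each `every` records.

    `fetch` is a hypothetical callable: it takes a URL and returns a dict of fields.
    Returns the number of records written during this run.
    """
    done = load_done(path)
    buffer = []
    saved = 0
    try:
        for url in urls:
            if url in done:
                continue  # already scraped in an earlier run
            buffer.append({"url": url, **fetch(url)})
            if len(buffer) >= every:
                saved += flush_records(buffer, path)
    finally:
        # Keep whatever was collected, even if the loop was interrupted.
        saved += flush_records(buffer, path)
    return saved
```

If the script stops halfway, running it again with the same file skips the URLs that were already saved, so you don't have to redo the whole scrape.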


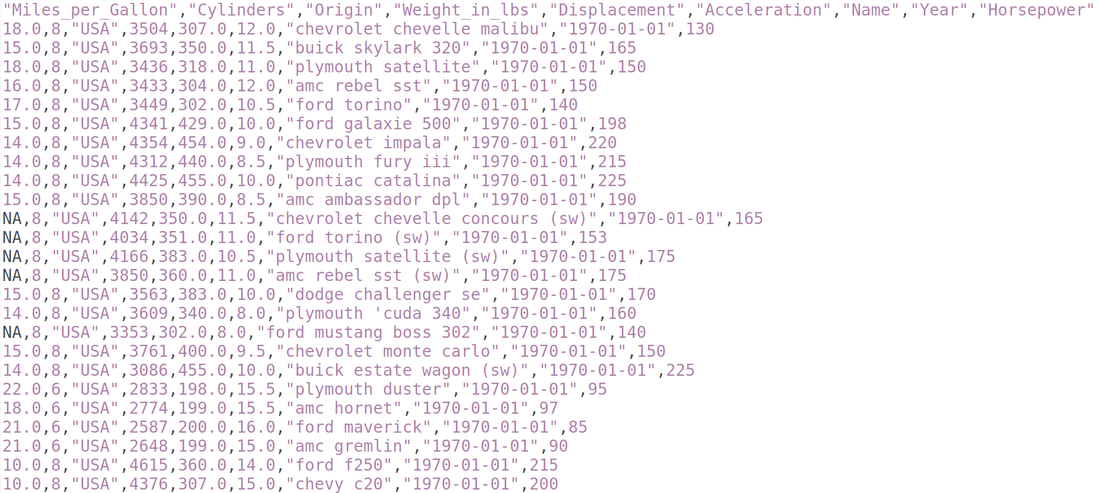

Text analytics is the field that deals with the analysis of text data in a data science context. Many of its methods are relatively simple since it's been around for a while now. However, more modern models are quite sophisticated and involve a deeper understanding of the texts involved. A widespread and relatively popular application of text analytics is sentiment analysis, which has grown significantly over the past few years. Also, its evolution, which involves the understanding of a text's tone and other stylistic aspects, has made it possible to assess text in different ways, not just in terms of positive or negative sentiment.

The sophistication of text analytics is mainly due to the artificial intelligence in it, especially deep learning (DL) and natural language processing (NLP). The latter ensures that the text is appropriately evaluated, taking into account syntax, grammar, parts of speech, and other relevant domain knowledge from linguistics. It also employs heuristics like the TF-IDF metric and frequency analysis of n-grams (sequences of n consecutive terms, which often form common phrases). All this yields a relatively large feature set that needs to be analyzed for patterns before a predictive model is built using it. That's where DL comes in. This form of A.I. has evolved so much in this area that there exist DL networks nowadays that can analyze text without any prior knowledge of the language. This growth of DL makes text analysis much more accessible and usable, though it's most useful when you combine DL with NLP.

The usefulness of text analytics, mainly when A.I. is involved, is undeniable. This usefulness has driven change in this field and advanced it more than most data science use cases. From comparing different texts (e.g., plagiarism detection) to correcting mistakes, and even creating new pieces of text, text analytics has a powerful niche.
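To make the TF-IDF and n-gram heuristics mentioned above a bit more tangible, here is a minimal sketch in plain Python. It assumes whitespace tokenization and one common TF-IDF variant (relative term frequency times the natural log of the inverse document frequency). Real NLP libraries use more refined tokenizers and weighting schemes, so treat the function names and the formula as illustrative choices rather than a standard.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return all runs of n consecutive tokens, joined by spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def tf_idf(docs, n=1):
    """Score every n-gram of every document; returns one dict per document."""
    tokenized = [ngrams(doc.lower().split(), n) for doc in docs]
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for terms in tokenized for term in set(terms))
    num_docs = len(docs)
    scores = []
    for terms in tokenized:
        counts = Counter(terms)
        total = len(terms) or 1  # guard against empty documents
        scores.append({
            term: (count / total) * math.log(num_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores


corpus = ["the cat sat", "the dog sat", "the cat ran"]  # toy corpus
unigram_scores = tf_idf(corpus)
bigram_scores = tf_idf(corpus, n=2)
```

In this toy corpus, a term that appears in every document (such as "the") gets a score of zero, while rarer terms score higher, which is exactly why the resulting feature set is useful for telling documents apart.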
Of course, it's always language-specific, which is why there are data scientists all over the world involved in it, often with each team working on a particular language. Naturally, English-related text analytics is more developed, partly because English is so widespread as a language.

Because of all that, text analytics seems set to remain a promising field with a future-proof presence. The development of advanced A.I. systems that can process and, to some extent, understand a large corpus attests to that. Nowadays, such A.I. systems can create original text that can be very hard to distinguish from text written by a human being, arguably passing the Turing test.

A great application of text analytics that is somewhat futuristic yet available right now is Grammarly. This slick online application utilizes DL and NLP to assess any given text and provide useful suggestions on improving it. Whether the text is for personal or professional use, Grammarly helps it deliver the message you intend, without any excessive words. Such a program is particularly useful for people who work with text in one way or another and are not super comfortable with English. Even the free version of it is useful enough to make it an indispensable tool for you. Check it out when you have the chance by installing the corresponding plug-in on your web browser!

Data security is a topic I've talked about in most of my books over the years and even made videos about (unfortunately these videos are no longer available as the contract with the platform has expired). In any case, as it's an important topic, I'll continue talking about it. After all, it concerns all data professionals, including data scientists. Data security is essential because it affects the usability of your models as well as the people involved in your projects. I'm not talking about just the stakeholders but also the people behind the data involved.
Say you have some personally identifiable information (PII) in your dataset, for example. Do you think the people this information corresponds to would be pleased if it got compromised, e.g. by a hacker? What about the accountability of the models? Securing your data is no longer a nice-to-have but something of an obligation, especially whenever sensitive information is involved.

Fortunately, you can secure your data in various ways. Encryption and back-ups are by far the most popular methods, though other cybersecurity techniques such as steganography can also be applied. Also, for each method, there are variants that you can consider, such as the different encryption algorithms, the various back-up schemes, etc. Usually, a cybersecurity professional can assess your needs and provide a solution for your data, though it's not far-fetched to obtain the same services from a tech-savvy data scientist too.

What about the cost of all this? After all, if you are to implement a cybersecurity solution, that's the first question you'd be asked by the stakeholders. The cost is broken down into two main parts: hardware-related and software-related. The former (which tends to be the larger part) involves the purchase of specialized equipment (e.g. a firewall node in your computer network, or a back-up server). The software part involves specialized software, such as the programs responsible for your encryption, intrusion detection, etc. This category also includes any software-as-a-service solution you may purchase (usually through a subscription) for software that lives on the cloud. Software handling DDoS attacks, for example, is commonplace and often comes as an add-on for any web hosting package you have for your site. Naturally, some of this software may have nothing to do with your data directly (e.g. the aforementioned DDoS attack prevention), but it can help keep any APIs you have up and running, serving processed data to your users and clients.
A good rule of thumb for assessing a cybersecurity module and its relevance to a data-related project is the useful lifetime of the data at hand. If the data is going to be obsolete (stale) in a few months, perhaps you don't need the latest and greatest encryption module. Likewise, if the data is available in other places for a small fee (so it's mostly an ETL effort to get it on your computers), then back-up systems may not need to follow the most advanced scheme.

Beyond these cybersecurity matters, there are other considerations that are useful to have, which however are beyond the scope of this article. Suffice it to say that this is a topic worth considering and discussing with your colleagues, as it is crucial in today's data-driven world where the security of digital assets is as important as physical security.

Nowadays, there is a lot of confusion between the fields of Statistics and Machine Learning, which often hinders the development of the data science mindset. This is especially the case when it comes to innovation since this confusion can often lead someone to severe misconceptions about what's possible and the value of the data-driven paradigm. This article will look into this topic and explore how you can add value through a clearer understanding of it.

First of all, let's look at what statistics is. As you may recall, statistics is the sub-field of mathematics that deals with data from a probabilistic standpoint. It involves building and using the mathematical models that describe how the data is distributed (distributions) and figuring out the likelihood of data points being part of these distributions through these functions. Many heuristics are used, though they are called statistical metrics or just statistics, and the whole field is generally very formalist. When data behaves as expected and when there is an abundance of it, statistics works fine, while it also yields predictive models that are transparent and easy to interpret or explain.
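To make the probabilistic approach described above concrete, here is a small sketch that assumes the data follows a normal distribution, fits that distribution to a sample, and then asks how plausible a new data point is under it. The sample values are made up for illustration, and `tail_probability` is a hypothetical helper name. The only library used is `statistics.NormalDist` from Python's standard library.

```python
from statistics import NormalDist

# Made-up sample (e.g. heights in cm), used purely for illustration.
heights = [162.0, 168.5, 171.2, 174.8, 177.3, 180.1, 165.4, 172.9]

# The modeling assumption: the data is normally distributed.
model = NormalDist.from_samples(heights)


def tail_probability(dist, x):
    """Two-sided probability of a value at least as far from the mean as x."""
    z = abs(x - dist.mean) / dist.stdev
    return 2 * (1 - NormalDist().cdf(z))


typical = tail_probability(model, 172.0)   # close to the mean, so a large value
unusual = tail_probability(model, 200.0)   # far from the mean, so a tiny value
```

Everything here hinges on the distribution assumption: the model is transparent and easy to explain, but its conclusions are only as good as that assumption.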
Now, what about machine learning? Well, this is a field of data analytics that involves an alternative approach to handling data, one that's more data-driven. In other words, machine learning involves analyzing the data without any a priori notions about it, in the form of distributions or any other mathematical frameworks. That's not to say that machine learning doesn't involve math, far from it! However, the math in this field is used to analyze the data through heuristic-based and rule-based models, rather than probabilistic ones. Also, machine learning ties in very well with artificial intelligence (A.I.), so much so that they are often conflated. A.I. has a vital role in machine learning today, so AI-based models often dominate in this field.

As you may have figured out by now, the critical difference between statistics and machine learning lies in the fact that statistics makes assumptions about the data (through the distribution functions, for example) while machine learning doesn't. This difference is an important one that makes all the difference (no pun intended) in the models' performance. After all, when data is complex and noisy, machine learning models tend to have better performance and generalize better. Perhaps that's why they are the most popular option in data science projects these days.

You can learn more about the topic by checking out my latest book, which covers machine learning from a practical and grounded perspective. Although the book focuses on how you can leverage Julia for machine learning work, it also covers some theoretical concepts (including this topic, in more depth). This way, it can help you cultivate the right mindset for data science and broaden your perspective on the data science field. So, check it out when you have a moment!

Cloud computing has taken the world by storm lately, as it effectively democratized computing power and storage.
This has inevitably impacted data science work too, especially when it comes to the deployment stage of the pipeline. Two of the most popular methods for accomplishing this are containers and micro-services, both enabling your programs to run on some server somewhere with minimal overhead.

The value of this technology is immense. Apart from the cost-saving that derives from the overhead reduction, it makes the whole process easier and faster. Getting acquainted with a container program like Docker isn't more challenging than learning any other software a data scientist uses, while there are lots of Docker images available for the vast majority of applications (including a variety of open-source OSes). Cloud computing in general is quite accessible, especially if you consider the options companies like Amazon offer.

The key value-add of all this is that a data scientist can now deploy a model or system as an application or an API on the cloud, where it can live as long as necessary, without burdening coworkers or the company's servers. Also, through this deployment method, you can scale up your program as needed, without having to worry about computational bandwidth and other such limitations.

Cloud computing can also be quite useful when it comes to storage. Many databases nowadays are available on the cloud, since it's much easier to store and maintain data there, while most cloud storage places have quite a decent level of cybersecurity. Also, having the data live on the cloud makes it easier to share it with the aforementioned data products deployed as Docker images, for example. In any case, such solutions are more appealing for companies today since not many of them can afford to have their own data center or any other in-house solutions.

Of course, all this is the situation today. How are things going to fare in the years to come?
Given that data science projects may run for a long time (particularly if they are successful), it makes sense to think about this thoroughly before investing in it. Considering that more and more people are working remotely these days (either from home due to COVID-19, or from a remote location because of a lifestyle choice), it makes sense that cloud computing is bound to remain popular. Also, as more cloud-related solutions become available (e.g. Kubernetes), this trend is bound to continue and even expand in the foreseeable future.

Hopefully, it has become clear from all this that there are several angles to a data science project, beyond data wrangling and modeling. Unfortunately, not many people in our field try to explain this aspect of our work in a manner that's comprehensible and thorough enough. Fortunately, a fellow data scientist and I have attempted to cover this gap through one of our books: Data Scientist Bedside Manner. In it, we talk about all these topics and outline how a data science project can come to fruition. Feel free to check it out. Cheers!

JSON stands for JavaScript Object Notation, and it's one of the most popular data file formats, not just for JavaScript but for practically all programming languages. It involves semi-structured data, just like XML, but most data professionals, including data scientists, prefer it. But why is it so popular, and how is it useful in data science work? In this article, we'll explore just that.

The usefulness of JSON lies in the fact that it's versatile and relatively concise. What's more, it tends to be faster to process than other similar file formats, while it's already widely used for web-related applications, making it easy to find mature programming libraries for it. Moreover, JSON is very intuitive, and many text editors have built-in functionality for viewing such files in an easy-to-read way. Furthermore, it's easy to create and edit JSON files yourself using a text editor, while programmatically, it's a walk in the park.
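As a quick illustration of how little code is needed to create and read JSON programmatically, here is a sketch using Python's built-in `json` module. The toy dataset and the helper names `save_dataset` and `load_dataset` are hypothetical. The dataset is stored as a dictionary with one key per variable.

```python
import json


def save_dataset(dataset, path):
    """Write a dictionary (one key per variable) to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(dataset, f, indent=2)


def load_dataset(path):
    """Read a JSON file back into a dictionary."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


# Toy dataset, for illustration only.
dataset = {
    "age": [34, 27, 45],
    "city": ["Athens", "Seattle", "London"],
    "subscribed": [True, False, True],
}

# The same structure as text, which is what ends up in the file.
as_text = json.dumps(dataset, indent=2)
round_trip = json.loads(as_text)
```

Numbers, strings, booleans, lists, and nested dictionaries all survive the round trip, which is a big part of why the format is so convenient for semi-structured data.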
JSON's compatibility with NoSQL databases is one of its fortes. Such systems include databases like MongoDB, which are quite popular in data science. Most new databases are also compatible with JSON as it's become a kind of standard. Additionally, JSON and the dictionary data structure go hand-in-hand, something vital in data science work. So, if you want to load some data from a JSON file, you can store it in a dictionary, while if you have a dataset (any dataset), you can code it as a dictionary (each variable being a key) and store it as a JSON file.

The JSON.jl library in Julia is one worth knowing about, especially if you want to use this programming language in your data science work. This fairly simple package enables you to parse and create JSON files, using the built-in Dict structure. It's a convenient library to know, even if it's still at version 0.21.x. JSON.jl makes use of the FileIO package on the back-end and its most useful functions are parse(), parsefile(), and print(). Note that the latter works with different data structures, not just dictionaries.

The JSON file format is closely linked to APIs too. The latter are particularly useful in various data-related applications and are instrumental in certain data products developed by data scientists. Also, many APIs are essential for acquiring data, so it goes without saying that knowing about them is valuable. APIs are ideal for proof-of-concept projects, too, as they don't require too much work to get up and running. As a result, they are a versatile tool for all sorts of projects, particularly those with a web presence.

The API Success book describes this technology in sufficient depth, without getting too technical. Besides, if you understand APIs' usefulness and how they fit into the bigger picture, it's not too hard to learn the technical aspects too, through a tutorial, for example. Note that you can get a 20% discount on this and any other book available at the publisher's website using the coupon code DSML.
Using this code will also help me out, so you can see it as a way to support this blog. Cheers!

Every data set is a multi-dimensional structure, a crystallization of information. As such, it can best be described through mathematics, particularly Geometry (literally, the science of measuring the Earth, and the foundation of many scientific fields, such as Physics). You may have a different perspective about Geometry, based on your high school education, but let me tell you this: the field of Geometry is much more than theorems and diagrams, which, although essential, are but the backbone of this fascinating field. Geometry is not just theoretical but also very practical. The fact that it applies to the data we deal with in data science attests to that.

When it comes to Geometry in data science, a couple of metrics come to mind. Namely, there is the Index of Discernibility (ID) and Density (there is also an optimizer I've developed called DCO, short for Divide and Conquer Optimizer, but it doesn't scale beyond 5 dimensions so I won't talk about it in this article). Both of these metrics are useful in assessing the feature space and aiding various data engineering tasks, such as feature selection (ID can be used for evaluating features) and data generation (through the accurate assessment of the dataset's "hot spots"). Also, both work with either hyperspheres (spheres in the multidimensional space) or hyper-rectangles. The latter is a more efficient way of handling hyperspaces so it's sometimes preferable.

Metrics like the ones mentioned previously have a lot to offer in data science and A.I. In particular, they are useful in evaluating features in classification problems and the data space in general (in the case of density). Naturally, they are very useful in exploratory data analysis (EDA) as well as other aspects of data engineering. Although ID is geared towards classification, Density is more flexible than that since it doesn't require a target variable at all.
Note that Density is largely misunderstood since many people view it as a probability-related metric, which is not the case. Neither ID nor Density has anything to do with Statistics, even if the latter has its own set of useful metrics.

Beyond the aforementioned points, there are several more things that are worth pondering when it comes to geometry-based metrics. Specifically, visualization is key in understanding the data at hand and, whenever possible, it's good to combine it with geometry-based metrics like ID and Density. The idea is that you view the data set both as a collage of data points mapped on a grid and as a set of entities with certain characteristics. The ID score and the density value are a couple of such characteristics. In any case, it's good to remember that geometry-based metrics like these can be useful when used intelligently, since there is no metric out there that's a panacea for data science tasks.

So what's next? What can you do to put all this into practice? First of all, you can learn more about the Index of Discernibility and other heuristic metrics in my latest book, Julia for Machine Learning (Technics Publications). Also, you can experiment with it and see how you can employ it in your data science projects. For the more adventurous of you, there is also the option of coming up with your own geometry-based metrics to augment your data science work. Cheers!

Delimited files are specialized files for storing structured (tabular) data. They are uncompressed and easy to parse through a variety of programs, particularly programming languages. Delimited files are widely used in data analytics projects, such as those related to data science. But what are they about, and why are they so popular?

There are various types of delimited files, all of which have their use cases. The most common ones are comma-separated values (CSV) files and tab-separated values (TSV) files.
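As a rough sketch of what reading these two common variants looks like in practice, the snippet below uses Python's built-in `csv` module on small in-memory samples. The sample data and the helper name `read_delimited` are made up for illustration. The only thing that changes between the two calls is the delimiter character.

```python
import csv
import io


def read_delimited(text, delimiter=","):
    """Parse delimited text whose first row holds the variable names.

    Returns a list of dictionaries, one per data row. All values are strings.
    """
    reader = csv.DictReader(io.StringIO(text), delimiter=delimiter)
    return [dict(row) for row in reader]


# Toy samples holding the same table in CSV and TSV form.
csv_text = "name,score\nAda,9\nLin,7\n"
tsv_text = "name\tscore\nAda\t9\nLin\t7\n"

csv_rows = read_delimited(csv_text)
tsv_rows = read_delimited(tsv_text, delimiter="\t")
```

Both calls yield the same rows, which shows that the format is defined by its delimiter rather than by its file extension.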
However, any character can be used to separate the various values of the variables, such as the pipe (|) or the semicolon (;). All delimited files are similar, though, in the sense that they contain raw data organized through the use of a delimiter (the aforementioned character). Note that the variable names are included in the delimited file in many cases, usually in the top row. Like the rest of the file, that row also has its values (in this case, the variable names) separated by the delimiter.

Delimited files are super useful in data science work and data analytics work in general. They are straightforward to produce (e.g., most software has the "export as CSV" option available) and easy to access since they are, in essence, just text files. Also, every data science programming language has a library for loading and saving data in this file format. The fact that many datasets are available in this format is a consequence of that. Additionally, if a delimited file is corrupt, it's relatively manageable to pinpoint the problem and correct it. Yet, even if the problem is unfeasible to remedy, you can still access the healthy part of the file and retrieve the data there. In the case of specialized data files involving compression, this isn't possible most of the time.

In Julia, there is a CSV library called CSV.jl (very imaginative name, I know!). Although its functionality is relatively basic, it is super fast (much faster than the Python equivalent), while it has excellent documentation. Despite what the name suggests, this library can be used for all kinds of delimited files, not just CSVs. The parameter "delim" is responsible for this, though you don't always need to set it, since the corresponding function can figure out the delimiter character on its own most of the time.

Delimited files are instrumental in data science work, but they aren't always the best option.
For example, in cases when you are dealing with semi-structured or unstructured data, it's best to use a different format for your data files, such as JSON. Also, suppose you are working with NoSQL databases. In that case, delimited files may not be useful at all, since the data in those databases is best suited for a dictionary data structure, such as that provided by JSON and XML files.

If you want to learn more about this topic and other topics related to data science work, feel free to check out my book Data Science Mindset, Methodologies, and Misconceptions. In this book, I talk about all data science-related matters, including data structures and such, providing a good overview of all the material related to this fascinating field. So, check it out when you have the chance. Cheers!

Optimization is the methodology that deals with finding the maximum or the minimum value of a function that's usually referred to as the objective (or fitness) function. It often involves the use of derivatives and calculus techniques, but nowadays it relies more and more on other, more efficient algorithms, many of which are AI-related. What's more, optimization plays a crucial role in all modern data science models, particularly the more sophisticated ones (e.g. ANNs, SVMs, etc.). But what is gradient descent and why is it such a popular optimizer?

Gradient descent (GD) is a deterministic optimizer that, given a starting point (initial guess), navigates the solution space based on the gradient at that point. The derivatives are calculated either analytically (through the corresponding function) or empirically (by approximating the limit definition of the derivative using the fitness function). It's similar to descending a valley (or climbing a hill in the case of a maximization problem) by moving in the direction that's steepest near your current position and always adjusting your course accordingly. Due to its simplicity and high performance, gradient descent is one of the most popular optimizers in its category.
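The procedure described above can be sketched in a few lines of Python. This is a bare-bones version under some assumptions: a fixed learning rate, a fixed number of steps, and derivatives estimated empirically through central differences. The function names and the toy objective `bowl` are made up for illustration.

```python
def numerical_gradient(f, x, h=1e-6):
    """Estimate the gradient of f at point x using central differences."""
    grad = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += h
        down[i] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad


def gradient_descent(f, start, learning_rate=0.1, steps=200):
    """Minimize f by repeatedly stepping against the gradient."""
    x = list(start)
    for _ in range(steps):
        grad = numerical_gradient(f, x)
        x = [xi - learning_rate * gi for xi, gi in zip(x, grad)]
    return x


def bowl(p):
    """Toy objective function with a single minimum at (3, -1)."""
    return (p[0] - 3) ** 2 + (p[1] + 1) ** 2


solution = gradient_descent(bowl, [0.0, 0.0])  # ends up very close to [3, -1]
```

For a maximization problem, you would step along the gradient instead of against it. Note that on functions with several valleys this procedure settles into whichever one is nearest to the starting point, which is one reason the choice of initial guess matters.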
Let's now look at some (somewhat better) alternatives to GD, deterministic and otherwise. Let's start with the stochastic ones since they are the ones more commonly used these days. The reason is that most optimization problems involve lots of variables and deterministic optimizers can't handle them, or they take too long to find a solution. Common stochastic optimizers used today include Particle Swarm Optimization (PSO), Simulated Annealing, Genetic Algorithms, Ant Colony Optimization, and Bee Colony Optimization. PSO in particular is quite relevant since other optimizers are often variants of PSO (e.g. the Firefly optimizer). All of these methods are adept at handling complex problems, sometimes with constraints too, outperforming GD. As for deterministic optimizers, there are a couple of them I've developed in the past year, one of which (Divide and Conquer Optimizer) is particularly robust for low-complexity problems (up to 5 variables). The best part is that none of the optimizers mentioned here require the calculation of a derivative, which can be a computationally heavy process (and in some cases not even possible).

One thing that's important to keep in mind, and which I cannot stress enough, is how optimization is key for data science, especially in AI-based models. It's something so common that it's hard to imagine any sophisticated machine learning model without an optimization process under the hood. Also, optimizers can be quite useful in data engineering tasks, particularly those involving feature selection and other problems involving a large solution space.

You can learn more about optimization and AI in general through a book I co-authored a couple of years back, through Technics Publications. It's titled AI for Data Science: Artificial Intelligence Frameworks and Functionality for Deep Learning, Optimization, and Beyond, and it's accompanied by code notebooks in Python and Julia. Feel free to check it out. Cheers! |

Zacharias Voulgaris, PhD

Passionate data scientist with a foxy approach to technology, particularly related to A.I.


|
