|

Lately, we've put together a new survey to better understand this area and the main pain points associated with it. It would be great if you could contribute through a response and perhaps through sharing this survey with friends and colleagues. Cheers! https://s.surveyplanet.com/arw5bz4a This is the second part of the Data Literacy for Professionals article

Examples of Data Literacy in Practice

Data literacy adds value to an organization in various ways, one of which is supporting decision-making. Say you need to create a new marketing campaign to increase customer retention. Where would you start? Would you just come up with a few random ideas, select the most promising ones, and run with them? A data-literate professional would take a step back, examine the data at hand, see if there is a way to collect additional data, if necessary, and then work with an analyst to make sense of it all. Then, after contemplating the insights gathered from this analysis, he would find some practical alternatives and discuss them with the marketing team (which might even be his immediate subordinates). Afterward, he might want to dig deeper into a specific option or two and, after examining the corresponding data and insights further, come up with a data-backed decision to present to the marketing executives.

Another example of how data literacy adds value to decision-making is UI enhancement, a vital part of the UX. Say that the site doesn't get many people to click on the "buy" button. Someone identifies this problem by observing the sales funnel and brings it to your attention. You then explore various alternatives for making the "buy" button stand out more, perhaps experimenting with its color, size, etc. Which option would you go with, however? If you are data literate, you get the help of a data scientist to design an experiment, run it, and come up with some insights as to which option yields a better click-through rate. This analysis can involve some statistical tests too. You'd then think about all this, evaluate its reliability, and decide on the most promising option for the button based on the results of this analysis. This mini-project can help improve the UI and possibly the whole UX, driving more sales for the company.
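To make the "buy" button experiment a bit more tangible, here is a minimal sketch of one statistical test a data scientist might run on it: a two-proportion z-test comparing the click-through rates of two button variants. The function name and the click and view counts are made up for illustration, and a real experiment would also need a proper design (sample size, randomization, test duration), which this sketch doesn't cover.

```python
import math


def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Two-sided z-test for the difference between two click-through rates.

    Returns (rate_a, rate_b, z, p_value). Hypothetical helper for illustration.
    """
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b

    # Pooled rate under the null hypothesis that both variants perform the same
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        raise ValueError("No variation in the data; the test is undefined.")

    z = (rate_b - rate_a) / std_err

    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical counts: variant A is the current button, variant B the new one
rate_a, rate_b, z, p_value = two_proportion_z_test(120, 2400, 156, 2400)
print(f"CTR A: {rate_a:.3f}, CTR B: {rate_b:.3f}, z: {z:.2f}, p-value: {p_value:.4f}")
```

A small p-value suggests the difference in click-through rate is unlikely to be due to chance alone. Whether that difference is large enough to matter for the business is still a judgment call, and that is where data literacy comes in.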
New products and services can also come about through data literacy. For instance, you could have a new analytics service for the users made available either on the website (if each user has an account there) or via an API. Perhaps even something geared towards a more personalized interface, based on the user's behavior on the website, an interface that can even change dynamically. All these may seem like magic to someone unaware of the potential of data but for a data-literate professional, especially someone with a bit of imagination, they are as commonplace as a coffee shop. In the competitive world we live in, getting an edge is paramount and something that data literacy can enable. Data literacy can help solve problems faster and more reliably, come up with new viable products/services based on data, and even bring about a more efficient and transparent culture in the organization, one based on a data-driven mindset. Additionally, data literacy can help make the most of the specialists involved in data work, improve employee retention (for these and other information workers), and transform the organization into a place that keeps up with the latest tech developments. An organization like this can foster more contentment and efficiency in its employees and enable them to commit long-term. A place like this can help individual professionals in many ways while at the same time benefiting other people involved, such as users and customers. In times like these, when the economy and the market are unstable, such improvements can bring about stability and even growth on both an individual and a collective level. Final thoughts Data literacy isn't a nice-to-have, not anymore anyway, but a necessity for any organization that wants to keep its place in today's increasingly digital world. A company comprising data-literate people, especially managers and other knowledge workers, can leverage resources it didn't know it had because they are in the form of data. 
Also, it can make it more agile as it can explore acquiring additional data resources and using them to improve existing data-related processes and services further. This company's competitors are probably doing this already, so it's no longer a luxury to invest in data literacy and use it to cultivate a data culture and make data-driven decisions. Data literacy also helps individual professionals through a series of specific improvements. For example, a mid-level manager can become more data literate and have a more modern approach to tackling the problems her team faces. Additionally, she can hire new members or train existing ones so that they can become more competent in handling and analyzing data to tackle the tasks in their workflows. Maybe even discover new ways to deal with bottlenecks and other issues that hinder efficiency. All that progress might eventually manifest as a promotion for that person and other people on her team. And if that person decides to leave that organization, she can have more options in future work placements and even new roles, thanks to her data literacy ability. Overall, data literacy is a powerful tool, or mindset and skill-set to be more accurate, for leveraging data and making better decisions accordingly. It is very hands-on and benefits people and organizations in various ways. It involves understanding data and how we govern it, analyzing it effectively, presenting and communicating the insights discovered, and protecting data/information, among other things. It entails understanding where privacy fits and taking actions to keep data related to people's identities under wraps. Data literacy is closely linked to data strategy and having a data culture in the organization. Being literate about data can also give professionals a competitive advantage over their data-illiterate colleagues and make a company more agile and relevant in the market. 
Especially when it comes to complex problems and difficult decisions, it can help bring more reliability and objectivity to the whole matter. This leveling up can enhance the organization overall, along with everyone associated with it, be it partners, vendors, or, most importantly, its customers and users. To learn more about data literacy, feel free to explore the corresponding page of this site. Cheers!

Introduction

Different people mean different things when they talk about data literacy. For this article, so that we are on the same page, let's use the definition of "the ability to create, manage, read, work with, and analyze data to ensure & maximize the data's accuracy, trust and value to the organization" (D. Marco, Ph.D.). Note that this definition highlights a crucial characteristic of data literacy which entails coherency and collaboration within the organization, something that often reflects a particular kind of culture. I'm referring to the data culture, which is an integral part of data strategy, merging the business objectives and plans with the data world where data becomes a kind of asset. All that's great, but it may seem a bit abstract. However, data literacy is very hands-on, even if it's not as low-level work as analytics. It is also utterly significant for all sorts of professionals, particularly decision-makers. Many people talk about making data-driven decisions and having a data-driven approach to problem-solving. How many of them do it, though, and to what extent? Well, data literacy enables professionals to do just that and make data something they value and leverage for the benefit of the whole. Data literacy is beneficial for other people too. For instance, when someone works in a data-literate organization, there tends to be more transparency about how decisions are made and what different pieces of data mean. So, if you have a role that involves data in some shape or form, you can be sure that it's not a black box and that you can learn from it. Naturally, this implies that you are data literate to some extent too! Data literacy is a state of mind, a way of thinking and acting about data. As such, it has many benefits that depend on the organization and the data available to it. The fact that many companies base their entire business model on data attests to the fact that data is crucial as an asset. 
To unlock its value, however, you need data literacy. Key Ideas and Concepts of Data Literacy Let's delve deeper into this by looking at the components of data literacy. For starters, data literacy involves understanding data and how it is governed. This part of data literacy is vital since many organizations have lots of data that is essentially useless because it's in silos and inaccessible to those who need it. This problem is essentially a data governance one. Also, as much of it involves personally identifiable information (PII), it has to abide by specific regulations such as GDPR. Otherwise, this data may be a huge liability. Data literacy also involves analytics, as it is when data is turned into information that it truly becomes useful. The latter we can understand better and reason with, especially in decision-making. Data in its original form is usually understandable only by computers. Analytics makes this transformation and enables others to benefit from the data. Usually, analytics work is handled by specialists, such as data scientists. Data literacy also involves presenting and communicating data. This part of data literacy often entails reasoning about insights and exploring how they can apply to an organization's challenges. Otherwise, data has just ornamental value, which may not be enough to justify people working with it. Perhaps that's why today every data professional is assessed based on communication skills too, not just technical ones. Finally, data literacy involves protecting the data and whatever information it spawned. It's usually specialized cybersecurity processes that ensure the protection aspect of data literacy, which also includes preserving the privacy of the people behind that data. In larger organizations, there may even be specialized professionals involved in this kind of work. What does a data-literate professional look like, though? For starters, it's not like he stands out from the crowd. 
But when that person engages in a conversation on a business topic, it becomes clear that they know how data can be used as an evergreen asset. Such a person may also undertake responsibilities related to the use of data in decision-making, be it through a data-driven marketing initiative, a cohort analysis of the customers or users, etc.

A data-literate professional can undertake numerous roles, not just those related to hands-on data work. He can be a competent team leader, a business liaison, a consultant, and even an educator, promoting a data culture in the org. As long as that person has a solid understanding of data and how the organization can put the data available to good use, that person is a data-literate professional and can add value through that.

Generally, data-literate professionals are very competent in leveraging data for making decisions and driving value in the org. This aspect of data literacy often involves having a sense of data and its potential. For someone else, data may be just something abstract and interesting to data professionals only. For the data-literate professional, however, it's sometimes something as powerful as a product. At the same time, it's a pleasant challenge because, just like products need work before you can trade them for money, data also requires special treatment. A data-literate professional accepts this challenge and works towards making it a reality. This special treatment may involve getting the right people in a team or leveraging the existing ones, doing some mentoring even, and turning this understanding of data into a set of processes that transform data into something of value.

When it comes to data literacy, there are several challenges most professionals face. I say most because people with a data background tend to find this whole matter intuitive and relatively easy. However, people who come from different backgrounds tend to struggle with data literacy in various ways.
After all, traditional education stems from a time when data wasn't something educators knew or cared about. Their data literacy skills were rudimentary at best, while they focused on educating people about those business models and concepts that were more relevant back then. That's not to say that business acumen isn't that important. It's more important, however, when it's integrated with data acumen (as Bernard Marr eloquently illustrates in his book and courses on data strategy). Data literacy is a journey for most professionals, and there are different levels to it. Maintaining a sense of humility about this matter and understanding there is always more to learn can go a long way. This isn't an easy task, especially for accomplished professionals who got far in their careers using traditional ways of thinking about assets and business processes. Perhaps through proper coaching, mentoring, and other educational tools, they can overcome the challenges that plague this journey toward complete data literacy. Data literacy is crucial in today's digital economy. As data is what some refer to as the new oil, the prima materia of many products, data literacy is the equivalent of an oil-based engine. The main difference is that it doesn't pollute and there are no practical limitations on the fuel! Nevertheless, it's not trivial as some data people make it out to be. Of course, you can plug this data into some off-the-shelf model and get it to spit out some results that you can put into some slick presentation and share with the stakeholders. However, this is often not enough or relevant. Data literacy helps people see how the data relates to the business objectives, tackling specific problems and answering particular questions. Having some fancy data model may be something interesting to boast about, but if it doesn't help the organization with its pain points, it seems like an ornament rather than something of value. 
Going back to our metaphor, it's more like a gadget than an engine that can help us traverse the distance between where we are as an organization and where we need to be. To be continued... In the meantime, feel free to learn more about data literacy on the corresponding page of this site.

At the end of last month, OpenAI released its latest product, which made (and still makes) waves in the AI arena. Namely, about three weeks ago, ChatGPT made its debut, and before long, it gained enough traction to outshine its predecessor, GPT-3. Since then, many people have started speculating on it and making interesting claims about its capabilities, role in society, business value, and future. But what about the human aspects of this technology? How can ChatGPT affect us as human beings and professionals in the next few years?

Let's start with the latter, as it's generally easier to understand. Whether you identify as a data professional (particularly one in Analytics), a Cybersecurity expert, or just someone interested in these fields, ChatGPT will affect you. As it's amoral, it may not understand how any given action is bound to have consequences for other people, so if you become obsolete because of its work in these areas, it's hard to blame it. After all, it was just trying to be helpful! And all those people who are gradually becoming more addicted to free services, free advice, and anything that doesn't part them from their cash are bound to feel an attraction to this technology. It's not just translators and digital artists who have real problems with this software, through the rapid increase in supply and the consequent lowering of the prices of their services. If someone could get insights or cybersecurity advice from ChatGPT, it's doubtful they'd consider paying you for the same services plus the additional burden of dealing with a human being. After all, we are all flawed in some way that may trigger others, while the AI system may seem relatively perfect.

As resources are becoming more scarce by the year, it's not far-fetched to expect technologies like this one to get a larger share of the market in analytics and cybersecurity services soon. How many people do you know who can tell the difference between good advice and not-so-good advice when the latter is phrased in flawless English and in a personalized way? And how many people have the maturity to appreciate and opt for the former, just out of principle?

As for the effects of ChatGPT on us as human beings, these are not too promising either. If someone is used to getting whatever they want without paying anything, it's only natural that this person would become spoiled, a taker of sorts, with a growing appetite for more free services.
I don't know about you, but I find that I am better off being surrounded by people who are givers or at least matchers instead of takers (I've experienced plenty of the latter in my life, especially as a student). Now, this psychological corrosion may not happen tomorrow or even next year, plus it's unfair to assign all the blame to ChatGPT since other similar technologies do the same. However, what ChatGPT does that no other software has managed before is democratize this free stuff (at least to its users) and give the illusion of knowing everything well enough. In other words, a ChatGPT user may feel that other people are unnecessary and that this software is sufficient for all their knowledge needs. This subjective matter may be untrue, but good luck convincing that person that this is not a good option for them or the world overall! Technologically, ChatGPT may be a brilliant product and one that can open new avenues of research toward a more futuristic society. However, without preserving the human aspects of the world, all this technology is bound to backfire and do more harm than good. Not because suddenly the AI may decide to dominate us, but because we as a species may not be ready for this tech and the rapid changes it can (and probably will) incur. To make things worse, the cost of this tech, although not factored in by the futurists and the evangelists of OpenAI, is still there. It seems paradoxical to me that we try to conserve energy, even in the winter months, because of the rising cost of gas and electricity, even though we allow such an energy-hungry tech to consume large amounts of energy (computational power isn't cheap!). As I don't wish to end this article on a sad note, I'd like to invite you to ponder solutions to this moral problem (something that I sincerely doubt this or any other AI is particularly good at). 
For additional inspiration, I'd recommend the book The Retro Future by John Michael Greer, which covers this complex topic of progress and technology much better than I can. Maybe ChatGPT is an opportunity for us all to view things from a different (hopefully more holistic) perspective and develop some Natural Intelligence to complement the Artificial Intelligence out there. Cheers.

Don't let the somewhat philosophical title mislead you! This isn't an article about the abstract aspects of the world, but something pointing to a very real problem in our thinking and how it makes experiencing life difficult. Namely, it's an age-old issue with our logic and reasoning, and how, as a data modeling tool, it fails to capture the essence of the stuff it aims to understand. In other words, it's a data problem that influences how we process information and understand life in its various aspects. In more practical terms, it is the loss of information through predefined categories that may or may not have relevance to life itself.

The problem starts with how we reason logically. In conventional logic, there are two states, true or false. It's the simplest possible way of thinking about something, assuming it's simple enough to break down into binary components. If we look at a particular plant, for example, we can say that it's either alive or dead. Fair enough. Of course, this approach may not scale well for a group of such plants (e.g., a forest). Can a forest's state be reduced to this all-or-nothing categorization? If so, what do we sacrifice in the process?

Things get more complicated once we introduce additional categories (or classes in Data Science). We can say that a particular plant is either a sprouting seed (still well within the ground), a newly blossomed plant (above the ground too, but just barely), a mature plant (possibly yielding seeds of its own), or a piece of wood that's lying there lifeless. These four categories may describe the state of a plant in more detail and provide better insight regarding how the plant is faring. But whether these categories have any real meaning is debatable. Most likely, unless you are a botanist, you wouldn't care much about this classification, as you may come up with your own that is better suited for the plant you have in mind. Naturally, the same issue with scalability exists with this classification also, as it's not as straightforward as it seems. A forest may exist in various states at the same time. How would you aggregate the states of its members? Is that even possible?

In analytics, we tend to avoid such problems as we often deal with continuous variables. These variables can be aggregated very easily, and we can teach a computer to reason with them very efficiently, perhaps more efficiently than we do. So, a well-trained computer model can make inferences about the plants we are dealing with based on the continuous variables we use to describe those plants.
Practically, that gives rise to various sophisticated models that appear to exhibit a kind of intelligence different from our conventional intelligence. This is what we refer to as AI, and it's all the rage lately. So, where does conventional (human) intelligence end and AI begin? Or, to generalize, where do any categories end and others begin? Can we even answer such a question when we are dealing with qualitative matters? Perhaps that's why we have this inherent need to quantify everything, especially for stuff we can measure. But how does this measurement affect our thinking? It's doubtful that many people stop to ask questions like that. The reason is simple: it's very challenging to answer them in a way agreeable to many people. This lack of consensus is what gave rise to heuristics of all sorts over the years. We cannot reason with complexity and sometimes we just need to have a crisp answer. Heuristics provide that for us, empowering us in the process. These shortcuts are super popular in data science too, even if few people acknowledge the fact. There is something uncomfortable with accepting uncertainty, especially the kind that's impossible to tackle definitively. Some things in nature, however, aren't black and white or conform to whatever taxonomy we have designed for them. They just are, giddily existing in some spectrum that we may choose to ignore as it's easier to view them in terms of categories. Categories simplify things and give us comfort, much like when we organize our notes in some predefined sections in a notebook (physical or digital) for easier referencing. The problem with categories arises when we think of them as something real and perhaps more important than the phenomena they aim to model. John Michael Greer makes this argument very powerfully in his book "The Retro Future," where he criticizes various things we have taken for granted due to the nature of our technology-oriented culture. 
But why are categories such a big problem, practically? Well, categories are by definition simplifications of something that would otherwise be expressed as a continuum, a spectrum of values. So, by applying this categorization to it, we lose information and make the transition back to the original phenomenon next to impossible. Additionally, categories are closely related to the binary classification of things since every categorical variable can be broken down into a series of binary variables. In the previous plant example, we can say that a plant is or isn't a sprouting seed, is or isn't a newly blossomed plant, etc. The cool thing about this, which many data scientists leverage, is that this transition doesn't involve any information loss. Also, if you have enough binary variables about something, you can recreate the categorical variable they derived from originally.

All this mental work (which to a large extent is automated nowadays) makes for a very artificial worldview where everything seems to exist in a series of ones and zeros, trues and falses, veering away from the complexity of the real world. So, it's not that the world itself is very limited, but rather our logic and, as a result, our perception and our mental models of it that are limited. As a well-known statistician famously said, "all models are wrong, but some are useful" (George Box). My question is: "how much accuracy are we willing to sacrifice to make something useful from the information at hand?"

If you find that heuristics are a worthwhile option for dealing with the complexity of life effectively, you'd be intrigued by how far they can go in data science and AI. There are plenty of powerful heuristics that can simplify the problems we are dealing with without information loss, all while bringing about interesting insights and new ways of applying creativity.
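As a small aside on the point about breaking a categorical variable into binary ones, here is a minimal sketch of that transition and its reverse, using the four plant states from the example. The function names and the sample "forest" are hypothetical, and this is just one plain-Python way to do it (data science libraries offer their own encoders).

```python
# The four plant states from the example, in a fixed order
CATEGORIES = ["sprouting seed", "newly blossomed", "mature", "dead wood"]


def to_binary(state, categories=CATEGORIES):
    """Turn one categorical value into a list of 0/1 flags, one per category."""
    if state not in categories:
        raise ValueError(f"Unknown category: {state!r}")
    return [1 if state == category else 0 for category in categories]


def from_binary(flags, categories=CATEGORIES):
    """Recreate the categorical value from its binary flags."""
    if len(flags) != len(categories) or sum(flags) != 1:
        raise ValueError("Expected exactly one flag set to 1.")
    return categories[flags.index(1)]


# A tiny hypothetical "forest"
plants = ["mature", "sprouting seed", "dead wood", "mature"]

encoded = [to_binary(plant) for plant in plants]
decoded = [from_binary(flags) for flags in encoded]

# The round trip gives back the original values, so nothing was lost
print(decoded == plants)

# Summing each binary column counts how many plants are in each state
stage_counts = dict(zip(CATEGORIES, (sum(column) for column in zip(*encoded))))
print(stage_counts)
```

Note that the round trip only shows the categorical and binary forms are interchangeable. The information lost when the original continuum was first cut into categories doesn't come back.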
My latest book, The Data Path Less Traveled, is a gentle introduction to heuristics of this kind and is accompanied by lots of code to keep things down-to-earth and practical. Check it out!

Due to the success of the Analytics and Privacy podcast in the previous few months, when the first season aired, I decided to renew it for another season. So, this September, I launched the second season of the podcast. So far, I have three interviews planned, two of which have already been recorded. Also, there are a few solo episodes, where I talk about various topics, such as Passwords, AI as a privacy threat, and more. Since I joined Polywork, many people have connected with me on various podcast-related collaborations, some of which are related to this podcast. So, expect to see more interview episodes in the months to come.

There is no official sponsor for this season of the podcast yet, so if you have a company or organization that you wish to promote through an ad in the various episodes (it doesn’t have to be all the remaining episodes!), feel free to contact me. Cheers!

As much as I'd love to write a (probably long) post about this, I'd rather use my voice. So, if you are interested in learning more about this topic, check out the latest episode of my podcast, available on Buzzsprout and a few other places (e.g., Spotify). Cheers!

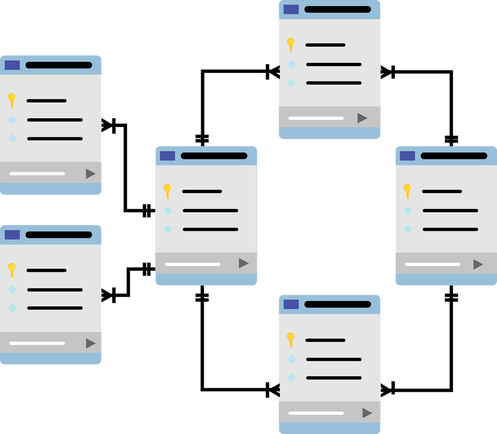

Creating Diagrams and Unconventional Graphics for Data Science and Data Analytics Work – Revisited
7/5/2021

I realize that I’ve done this topic before, but perhaps it needs some more attention, as it’s a very useful topic. Diagrams are great, but they are also challenging. As for the other graphics (particularly those not generated by a plotting library), these can be tough too. But both diagrams and these unconventional graphics are often essential in our line of work, be it as data scientists or data analysts. Let's examine the hows and whys of all this.

First of all, diagrams and graphics, in general, are a means of conveying information more intuitively. When you look at a table filled with numbers and other kinds of data, you need to think about them, and sometimes you have to know something about the context of all this. With diagrams, you may get an idea of the underlying information even if you don't know much about the context. Of course, the latter can help bring about scope and perspective, helping you interpret the diagram better and make it more applicable to the task at hand.

Diagrams and unconventional graphics are paramount in presentations too. Imagine going to a client or a manager with just a code notebook at your disposal! Even if they appreciate you having done all this work, chances are that you'll need more than that to get them on your side and see the real value behind all these ones and zeros! Besides, the adage "a picture is worth a thousand words" is valid, even in Analytics work. Data modelers figured that out a long time ago, which is why diagrams are their bread and butter. Perhaps there is something to be learned from all this.

But how do you go about creating diagrams and unconventional graphics in general? After all, graphics design is a challenging discipline, and it's not realistic to try to do this kind of work without lots of studying and practicing.
Also, it's doubtful we'll ever be as good as graphics professionals, who often have the talent to drive their know-how. Still, we can learn some basics and create decent-looking diagrams and graphics to facilitate our data science endeavors. For starters, we can invest in learning a program like GIMP. This software is an open-source alternative to Photoshop, and it's well-established and documented. So, if you have a good image or graphic to work with, GIMP can make it shine. Also, programs like LibreOffice Draw can be practically essential for this sort of work, especially if you want to build something from scratch.

Contrary to what some people think, creating graphics is very detailed work, not some artistic endeavor. You need to use both your analytical and your imaginative faculties for such a task, even if the imagination part may seem dominant, at least in the beginning. So, for any graphics-related tasks, remember, zooming in is your friend! As for the properties box of any graphical object, that's your best friend!

Anyway, I could go on and talk about graphics in data science and data analytics work all day, but it’s not possible to do this topic justice in a single blog post. Besides, the best way to learn is by practicing, just like when it comes to building and refining data models for your Analytics work. Cheers!

Many people talk about strategy nowadays, from the strategy of a marketing campaign to business strategy, and even content strategy. However, strategy is a more general concept that finds application in many other areas, including data science. In this article, we'll look at how strategy relates to data science work, as well as data science learning.

Strategy is being able to analyze a situation, create a plan of action around it, and follow that plan. Strategy is relevant when there are other people (players) involved, as it deals with the dynamics of the interactions among all these people.
It's a vast field, often associated with Game Theory, a discipline to which John Nash, considered to be one of the best modern mathematicians, made seminal contributions (he even won the Nobel Memorial Prize in Economic Sciences for this work, once its applications in Economics were discovered). In any case, strategy is not something to be taken lightly, even if there are more lighthearted applications of it out there, such as strategy games, something about which I'm passionate.

Strategy applies to data science too, however, as the latter is a complex matter that also involves lots of people (e.g., the project stakeholders). Thinking about data science strategically is all about understanding the risks involved and the various options available, and employing foresight in your every action as a data scientist. It's not just a responsible role (esp. when dealing with sensitive data) but also a role crucial in many organizations. After all, in many cases, it's us who deliver insights that effect changes in the organization or bring about valuable (and often profitable) products or services, which the organization can market to its clients.

Strategy in data science is all about thinking outside the box and understanding the bigger picture. It's not just the datasets at hand that matter, but how they are leveraged and used to build valuable data products. It's about mining them for insights significant to the stakeholders instead of coming up with findings of limited importance. Data science is practical and hands-on, just like the strategies that revolve around it.

Strategy in data science is also relevant to how we learn it. We may go for the more established option of doing a course on it and reading a textbook or two that the instructor recommends. However, this is just one strategy and perhaps not the best one for you.
Mentoring is another strategy that's becoming increasingly important these days, since it's more hands-on and personal: it addresses the specific issues that you as a learner run into while assimilating newfound data science knowledge. Another powerful strategy is videos and quizzes that provide you with valuable knowledge and know-how, enabling you to get a more intuitive understanding of a data science topic. Of course, there is also the option of combining two or more such strategies for a more holistic approach to data science learning.

Choosing a strategy for your data science work or your data science learning isn't easy. This matter is something you often need to think about and evaluate over several days. In any case, data science educational material can usually help you with that and can also supplement your work, enriching your skill-set. Some such material you can find among the books I've published as well as the video courses I've created (e.g., those on Cybersecurity). I hope they can help you in your data science journey and make it easier and more enjoyable. Cheers!

Data modeling, or data architecture, is the discipline that deals with how data is organized, how various (mostly business-related) processes express themselves as data flows, and how we leverage data to answer business-related questions. It involves some basic analytics (the stuff you'd do to create a pivot table, for example) but no heavy-lifting data analysis, like what you'd find in our field. There is no doubt that data modeling benefits from data analytics a great deal, but the reverse is also true. Let's explore why through a few examples.

First of all, data modeling is fundamental to the structure of the data involved (data architects often design the databases we use) and the relationships among the various datasets, especially when it comes to an RDBMS architecture.
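
To make the idea of relationships among datasets a bit more concrete, here is a minimal sketch using Python's built-in sqlite3 module. The tables (customers, orders), their columns, and all the figures are hypothetical, made up purely for illustration; a real data architect's design would likely look quite different. The sketch links two tables through a foreign key and then builds a pivot-style summary on top of that link.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with two related (hypothetical) tables."""
    conn = sqlite3.connect(":memory:")
    # SQLite only enforces foreign keys when this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript("""
        CREATE TABLE customers (
            customer_id INTEGER PRIMARY KEY,
            region      TEXT NOT NULL
        );
        CREATE TABLE orders (
            order_id    INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
            quarter     TEXT NOT NULL,
            amount      REAL NOT NULL
        );
    """)
    # Made-up sample rows, for illustration only.
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?)",
        [(1, "North"), (2, "South"), (3, "North")],
    )
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?, ?)",
        [(1, 1, "Q1", 100.0), (2, 1, "Q2", 150.0),
         (3, 2, "Q1", 80.0), (4, 3, "Q2", 50.0)],
    )
    conn.commit()
    return conn


def revenue_pivot(conn):
    """Return revenue per region and quarter, much like a small pivot table."""
    rows = conn.execute("""
        SELECT c.region,
               SUM(CASE WHEN o.quarter = 'Q1' THEN o.amount ELSE 0.0 END) AS q1,
               SUM(CASE WHEN o.quarter = 'Q2' THEN o.amount ELSE 0.0 END) AS q2
        FROM orders AS o
        JOIN customers AS c ON c.customer_id = o.customer_id
        GROUP BY c.region
        ORDER BY c.region
    """).fetchall()
    return {region: {"Q1": q1, "Q2": q2} for region, q1, q2 in rows}


if __name__ == "__main__":
    connection = build_demo_db()
    for region, totals in revenue_pivot(connection).items():
        print(region, totals)
```

The foreign key is what encodes the relationship between the two datasets: with enforcement switched on, an order that points to a non-existent customer is rejected, and the join can rely on that link when summarizing the data.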
However, data architects also work with semi-structured data and ensure that the data is kept accessible and secure. Over the past few years, data modelers have also been working in the cloud, ensuring efficiency in how we access the data stored there, all while keeping the overall costs low. So, it's next to impossible to do any data-related work without consulting with a data architect. Since data modeling is the language these professionals speak, we need to know it, at least to some extent.

Data modeling also involves generating reports based on the data at hand. These reports may need to be augmented using additional metrics, which may not be very easy to compute with the conventional analytics tools (slicing and dicing methods). So, we may need to step in there and build some models to make these metrics available for these reports. Before we do, however, we need to know about their context in the problem at hand. This context is something some knowledge of data modeling can help provide.

Apart from these two cases, there are other scenarios where we need to leverage data modeling knowledge in our pipelines. These, however, are project-specific and beyond the scope of this article. In any case, having the right mindset in data science (and data analytics in general) is crucial for bridging the gap between our field and data modeling. This is something I explore in all of my books, particularly the Data Science Mindset, Methodologies, and Misconceptions one. So, check it out when you have a moment. Cheers. |

Zacharias Voulgaris, PhD

Passionate data scientist with a foxy approach to technology, particularly related to A.I.

|
