
Introduction

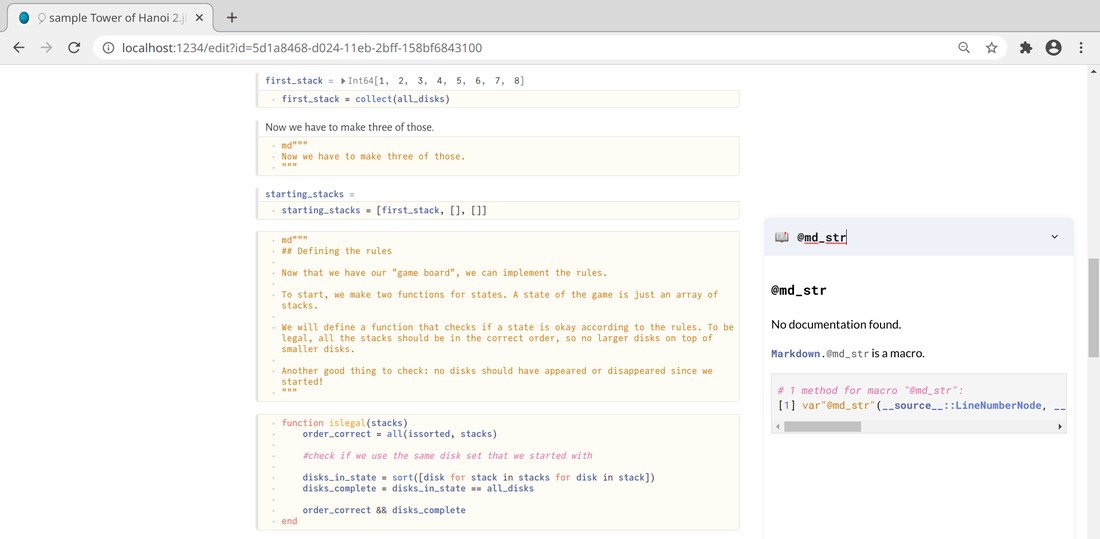

Code notebooks have become a necessity for anyone doing serious work in this field. Although they don't have the same functionality as IDEs, they are instrumental, especially if you want to showcase your code. They have also evolved quite a bit over the years. The notebook covered in this article seems to be the latest step in that evolution, at least for data science work in the Julia programming language.

Don't You Mean Jupyter Notebooks?

I understand why someone would think that. After all, Jupyter notebooks are well-established, and I've often used them myself over the years. However, the code notebook I write about here is an entirely different animal (you could say another world altogether!). Neptune notebooks have little to do with Jupyter ones, so if you go to them expecting them to be just like Jupyter but better, you'll be disappointed. However, if you see them for what they are, you may be in for a pleasant surprise.

A Voyage through the Solar System of Code Notebooks

Neptune notebooks are essentially Julia scripts rendered in a web browser. At the time of this writing, this browser is usually Chrome or some variant of it (e.g., Chromium), or Firefox. However, the latter isn't ideal for particular tasks, such as printing (even if it's PDF printing). A Neptune notebook can run in Julia even if you don't have the Neptune.jl library installed. If you do have it, however, you can load the notebook in your browser and have Julia running in the background, just like with Jupyter notebooks. Unlike its more established sibling, though, the Neptune notebook only supports Julia, particularly the later versions of the language. Also, the layout is quite different, and at first it may seem off-putting if you are used to the elegance and refined interface of Jupyter notebooks.

Neptune notebooks are rudimentary, perhaps even minimalist, compared to Jupyter ones. However, because of this, they are far more stable and efficient.
In fact, in the few months that I've been using Neptune notebooks, I've never had one crash on me, not once. Also, authentication errors are rare and only happen if you try to run a Neptune notebook in both Firefox and Chromium simultaneously. The notebook seems to lock onto the browser you start it in, usually the default browser. Wrinkles like this will hopefully be ironed out in future versions of the Neptune library. Despite them, these notebooks are quite slick, and their support of markdown is a noteworthy alternative to the text cells of Jupyter notebooks. Overall, at the time of this writing, they are perhaps geared towards more advanced users. Hopefully, this will change if more data scientists embrace this technology.

By the way, technically Neptune is a fork of the Pluto.jl library, which enables Pluto notebooks. However, the latter, although quite interesting, aren't designed for data science work, and I wouldn't recommend them for it. If, however, you are a Julia programmer who wants to use something different, Pluto is a good alternative. Just don't try to do a data science project in it before getting an insurance policy on your computer, since you are likely to physically break it out of frustration!

Final Thoughts

The development of code notebooks is fascinating, and Neptune seems to be a respectable addition to all this. As there aren't any decent tutorials at the moment that I can point you to, I suggest you play around with such a notebook yourself to see if it works for you. If you want to see one in action, you can check out the Anonymization and Pseudonymization course I published relatively recently on WintellectNow. All the coding in the hands-on part of that mini-course is done in a Neptune notebook. Cheers.


Many people talk about strategy nowadays, from the strategy of a marketing campaign to business strategy, and even content strategy. However, strategy is a more general concept that finds application in many other areas, including data science. In this article, we'll look at how strategy relates to data science work, as well as data science learning.

Strategy is being able to analyze a situation, create a plan of action around it, and follow that plan. Strategy is relevant when there are other people (players) involved, as it deals with the dynamics of the interactions among all these people. It's a vast field, often associated with Game Theory, to which John Nash, considered to be one of the best modern mathematicians, made seminal contributions (he even won the Nobel Prize in Economics for this work, once its applications in Economics were recognized). In any case, strategy is not something to be taken lightly, even if there are more lighthearted applications of it out there, such as strategy games, something about which I'm passionate.

Strategy applies to data science too, as the latter is a complex matter that also involves lots of people (e.g., the project stakeholders). Thinking about data science strategically is all about understanding the risks involved, weighing the various options available, and employing foresight in your every action as a data scientist. It's not just a responsible role (esp. when dealing with sensitive data) but also a crucial one in many organizations. After all, in many cases, it's us who deliver the insights that effect changes in the organization or bring about valuable (and often profitable) products or services, which the organization can market to its clients.

Strategy in data science is all about thinking outside the box and understanding the bigger picture. It's not just the datasets at hand that matter, but how they are leveraged and used to build valuable data products.
It's about mining them for insights significant to the stakeholders instead of coming up with findings of limited importance. Data science is practical and hands-on, just like the strategies that revolve around it.

Strategy in data science is also relevant to how we learn it. We may go for the more established option of taking a course on it and reading a textbook or two that the instructor recommends. However, this is just one strategy and perhaps not the best one for you. Mentoring is another strategy that's becoming increasingly important these days, since it's more hands-on and personal: it addresses the specific issues that you as a learner run into while assimilating your newfound data science knowledge. Another powerful strategy is videos and quizzes that provide you with valuable knowledge and know-how, enabling you to get a more intuitive understanding of a data science topic. Of course, there is also the strategy of combining two or more such strategies for a more holistic approach to data science learning.

Choosing a strategy for your data science work or your data science learning isn't easy. It's something you often need to think about and evaluate over several days. In any case, data science educational material can usually help you with that and can also supplement your work, enriching your skill-set. You can find some such material among the books I've published as well as the video courses I've created (e.g., those on Cybersecurity). I hope they can help you in your data science journey and make it easier and more enjoyable. Cheers!

There is a certain kind of information in the world of data that makes it possible to identify particular individuals personally. In other words, there is a way to match a specific person to a data record based on the data alone. Such data is referred to as personally identifiable information (PII), and handling it properly is crucial in data science and data analytics projects.
After all, a leak of PII would put those individuals' privacy at risk, and the organization behind the data could get sued. In this article, we'll look at a couple of popular methodologies for dealing with PII.

Fortunately, Cybersecurity as a field was developed for tasks like this one. Anything that has to do with protecting information and privacy falls under this category of methods and methodologies. Since PII is such an important kind of information, several cybersecurity methodologies are designed to keep it, and the people behind it, safe. The most important such methodologies are anonymization and pseudonymization. These methodologies aim to either scrap or conceal any PII-related data, securing the dataset in terms of privacy.

Let's start with anonymization. This Cybersecurity methodology involves scrapping any PII from a dataset. This covers any variables containing PII (e.g., name, address, social security number, financial information, etc.) as well as any combination of variables closely linked to PII (e.g., medical information with general location data). Although this ensures to a large extent that PII is not abused, and also makes the dataset somewhat lighter and easier to work with, it's not always preferable. After all, the PII fields may contain useful information for our model, so discarding them could distort the dataset's signal. That's why it's best to use this methodology when the PII variables aren't that useful, or when they contain very sensitive information that you can't risk leaking out.

As for pseudonymization, this is a Cybersecurity methodology that entails masking PII through various techniques. This way, all the relevant information is preserved in some form, although deriving the original PII fields from it is quite challenging.
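To make the difference between the two methodologies more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the records, the field names, the choice of which fields count as PII, and the `SECRET_SALT` value are all hypothetical, and keyed SHA-256 hashing (HMAC) is just one common way of masking a field, not the only one.

```python
import hashlib
import hmac

# Hypothetical records; the field names are illustrative only.
records = [
    {"name": "Alice Smith", "ssn": "123-45-6789", "age": 34, "city": "Austin"},
    {"name": "Bob Jones", "ssn": "987-65-4321", "age": 51, "city": "Denver"},
]

# Assumption: these are the fields we treat as PII in this toy dataset.
PII_FIELDS = {"name", "ssn"}

# Assumption: a secret value stored separately from the dataset.
SECRET_SALT = b"replace-with-a-secret-value"


def anonymize(record, pii_fields=PII_FIELDS):
    """Anonymization: drop the PII fields entirely."""
    return {k: v for k, v in record.items() if k not in pii_fields}


def pseudonymize(record, pii_fields=PII_FIELDS, salt=SECRET_SALT):
    """Pseudonymization: replace each PII value with a keyed SHA-256 digest."""
    masked = dict(record)  # copy, so the original record is left untouched
    for field in pii_fields:
        if field in masked:
            value = str(masked[field]).encode("utf-8")
            masked[field] = hmac.new(salt, value, digestmod=hashlib.sha256).hexdigest()
    return masked


anonymized = [anonymize(r) for r in records]
pseudonymized = [pseudonymize(r) for r in records]
```

In the anonymized records the PII columns are simply gone, whereas in the pseudonymized ones they are still present but masked, so identical inputs still map to identical digests and the non-PII fields are left as they were.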
Although this Cybersecurity methodology is not fool-proof, it provides sufficient protection of any sensitive information involved, all while preserving the dataset's signal to a large extent. A typical pseudonymization method is hashing, whereby we hash each field (often with the addition of some "salt" in the process), turning the sensitive data into gibberish while maintaining a one-to-one correspondence with the original data.

Beyond anonymization and pseudonymization, several other Cybersecurity methodologies are worth knowing about, even if you only delve into data science work. If you want to learn more about this topic, including how it ties into the whole Cybersecurity ecosystem, you can check out my latest video course on WintellectNow: (Fundamentals of) Anonymization and Pseudonymization for Data Professionals. So, check it out when you have a chance. Cheers!

Data modeling, or data architecture, is the discipline that deals with how data is organized, how various (mostly business-related) processes express themselves as data flows, and how we leverage data to answer business-related questions. It involves some basic analytics (the stuff you'd do to create a pivot table, for example) but no heavy-lifting data analysis, like what you'd find in our field. There is no doubt that data modeling benefits from data analytics a great deal, but the reverse is also true. Let's explore why through a few examples.

First of all, data modeling is fundamental to the structure of the data involved (data architects often design the databases we use) and to the relationships among the various datasets, especially when it comes to an RDBMS architecture. However, data architects also work with semi-structured data and ensure that the data is kept accessible and secure. Over the past few years, data modelers have also been working in the cloud, ensuring efficiency in how we access the data stored there, all while keeping the overall costs low.
So, it's next to impossible to do any data-related work without consulting a data architect. Since data modeling is the language these professionals speak, we need to know it, at least to some extent.

Data modeling also involves generating reports based on the data at hand. These reports may need to be augmented with additional metrics, which may not be easy to compute with the conventional analytics tools (slicing and dicing methods). So, we may need to step in and build some models to make these metrics available for these reports. Before we do, however, we need to understand their context in the problem at hand. This context is something that some knowledge of data modeling can help provide.

Apart from these two cases, there are other scenarios where we need to leverage data modeling knowledge in our pipelines. These, however, are project-specific and beyond the scope of this article. In any case, having the right mindset in data science (and data analytics in general) is crucial for bridging the gap between our field and data modeling. This is something I explore in all of my books, particularly the Data Science Mindset, Methodologies, and Misconceptions one. So, check it out when you have a moment. Cheers.

Zacharias Voulgaris, PhD

Passionate data scientist with a foxy approach to technology, particularly related to A.I.

Archives

April 2024
