
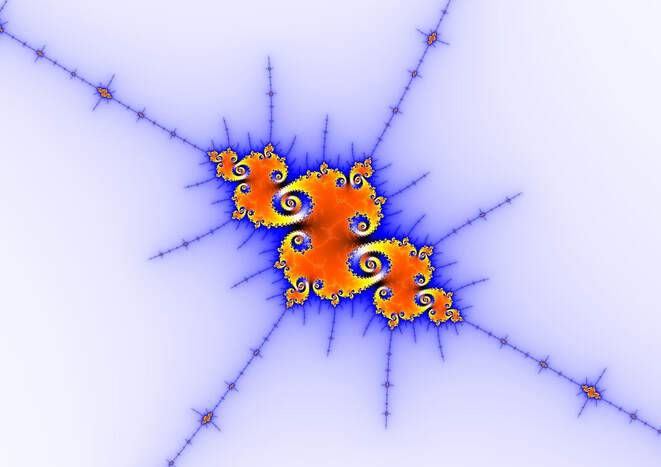

It's funny how, just when you think you know something, you often discover that you don't know it all that well. This is particularly the case in data science, a field that holds more mystery than most people think. For example, a great many heuristics and models are based on the idea of similarity, and several metrics have been developed to gauge it. Many of them are based on distances, but others are more original in various ways.

During my exploration of the hidden aspects of data science (my favorite hobby), I came across the idea of a similarity metric that is free of the dimensionality constraints that affect all distance-based metrics, while also being fast and easy to calculate. It is also something original that I haven't encountered anywhere else, and I've looked around quite a bit, especially when I was writing the book "Data Science Mindset, Methodologies and Misconceptions", where I talk about similarity metrics briefly. I cannot explain it in detail here because this metric makes use of operators and heuristics that are themselves original, part of my new frameworks of data analytics. Let's just say that it uses mathematics in a way that seems familiar and comprehensible, but that has not been used before.

The metric yields values in [0, 1], with 1 being completely similar and 0 being completely dissimilar. The idea is to gauge similarity from different perspectives and combine the results, something that unfortunately only works if the data is properly normalized. Given that all conventional ways of normalizing data are inherently flawed, this metric is bound to work only in properly normalized data spaces. Because such spaces are more or less balanced (even if they have outliers), the average similarity of all the data points in them is always around 0.5 (neutral similarity), which makes the metric very easy to interpret.

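
The dimensionality constraint on distance-based metrics mentioned above can be illustrated with a minimal sketch in Python. This does not implement the metric described in this post, whose details are not given here. It uses a conventional stand-in instead: Euclidean distance between random points in a unit hypercube, rescaled to [0, 1]. The function names, the sample sizes, and the uniform random data are all assumptions made for illustration.

```python
import math
import random
import statistics


def distance_similarity(a, b):
    """Euclidean distance rescaled to [0, 1] for points in the unit hypercube.

    Illustrative stand-in only; this is NOT the metric discussed in the post.
    """
    dist = math.dist(a, b)
    max_dist = math.sqrt(len(a))  # length of the hypercube's main diagonal
    return 1.0 - dist / max_dist


def similarity_spread(n_points, n_dims, seed=0):
    """Return (mean, standard deviation) of all pairwise similarities."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    points = [[rng.random() for _ in range(n_dims)] for _ in range(n_points)]
    sims = [
        distance_similarity(points[i], points[j])
        for i in range(n_points)
        for j in range(i + 1, n_points)
    ]
    return statistics.mean(sims), statistics.stdev(sims)


# Compare a low-dimensional space with a high-dimensional one.
low_mean, low_spread = similarity_spread(n_points=40, n_dims=2)
high_mean, high_spread = similarity_spread(n_points=40, n_dims=500)
print(f"2 dimensions:   mean={low_mean:.3f}, spread={low_spread:.3f}")
print(f"500 dimensions: mean={high_mean:.3f}, spread={high_spread:.3f}")
```

With uniformly random data like this, the spread of the similarity values shrinks sharply as the number of dimensions grows, so every pair of points ends up looking about equally similar. That loss of contrast is one common way the dimensionality problem shows up in distance-based similarity.
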
As with other metrics and heuristics, the most important thing is not the metric itself but the doors it opens, revealing new possibilities (e.g. a new kind of discernibility metric). That's why I found the picture of the fractal above quite relevant, since it is all about self-similarity, a concept that led us to the discovery of a new kind of mathematics related to chaos. Interestingly, even with such advanced knowledge, we are unable to fully comprehend the chaos that reigns in modern A.I. systems, which brings its own set of problems. So, I ask you to wonder for a moment how much better A.I. would be if it were developed using comprehensible heuristics, making it transparent and interpretable. Perhaps its thinking patterns wouldn't be as dissimilar to ours and we wouldn't see it as much of a threat.


Zacharias Voulgaris, PhD

Passionate data scientist with a foxy approach to technology, particularly related to A.I.