
(image borrowed from the kammerath.co.uk website)

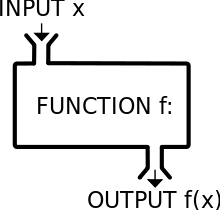

The object-oriented programming (OOP) paradigm has been around long enough to have proven itself useful, and it has considerable advantages over the procedural paradigm that was the norm before it. Lately, however, we have observed the rise of a different kind of programming, namely the functional paradigm. Functional programming focuses on the use of functions rather than on mutable state and workspaces of variables. Although this may seem alien to you if you are coming from a conventional (i.e. imperative) programming background, it is very practical when dealing with complex processes, as it mitigates the risk of errors, particularly logical ones, which are especially hard to debug. So it’s not surprising that functional programming has found its way into the data science pipeline with languages like Scala and, more recently, Julia.

Functional programming in data science allows for smoother and cleaner code, something that is particularly important when you need to put that code into production. Some errors appear only under very specific circumstances that may not be covered in the QA testing phase (assuming you have one). This translates into the possibility of an error surfacing in your pipeline once it is running on the cloud (or on the computer cluster, if you are the old-fashioned type!). Needless to say, this means lots of dollars leaking out of the company’s budget and doubts about your abilities as a data scientist. Functional programming greatly reduces this risk by ensuring that every part of the data science process has specific inputs and outputs, which are usually well-defined and crisp. So, if there is a chance of an error, it is not too hard to eradicate it, since it is fairly easy to test each function separately and ensure that it works as expected.
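To make the point about well-defined inputs and outputs concrete, here is a minimal sketch of a tiny data-cleaning pipeline written in a functional style. It uses Python only because it is easy to run anywhere; the same idea carries over to Scala or Julia. The function names (`drop_missing`, `standardize`, `clip`, `compose`) and the sample data are made up for illustration and are not taken from any particular library.

```python
from functools import reduce
from statistics import mean, pstdev
from typing import Callable, List, Optional, Sequence


def drop_missing(values: Sequence[Optional[float]]) -> List[float]:
    """Return a new list without the None entries; the input is not modified."""
    return [v for v in values if v is not None]


def standardize(values: Sequence[float]) -> List[float]:
    """Return z-scores using the population standard deviation.

    A constant input (zero spread) is mapped to all zeros.
    """
    mu, sigma = mean(values), pstdev(values)
    return [0.0 if sigma == 0 else (v - mu) / sigma for v in values]


def clip(values: Sequence[float], limit: float = 3.0) -> List[float]:
    """Return a new list with every value limited to [-limit, limit]."""
    return [max(-limit, min(limit, v)) for v in values]


def compose(*steps: Callable) -> Callable:
    """Chain single-argument functions, left to right, into one function."""
    return lambda data: reduce(lambda acc, step: step(acc), steps, data)


# The pipeline is itself just a function built out of smaller functions.
pipeline = compose(drop_missing, standardize, clip)

raw = [2.0, None, 4.0, 6.0]   # hypothetical sample data
result = pipeline(raw)        # raw itself is left unchanged
```

Because each step depends only on its arguments and returns a new value, every step can be checked in isolation (for example, `clip([-5.0, 0.5, 7.0])` should give `[-3.0, 0.5, 3.0]`), and a failure in production can be traced to a single function rather than to some shared state.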
So, even if Python is ideal for data science due to its breadth of packages, it may not be as robust in production as a functional language. This is probably why the Spark big data platform is built on Scala, a language with strong functional features, rather than Python or Java (even though Spark runs on the JVM on the back-end). Also, even though Spark has quite a mature API for Python, many people use Scala when working with that platform, even if they employ Python for prototyping.

All in all, even though the OOP paradigm still works great and lends itself to data science applications, the functional paradigm seems to be better suited to these kinds of tasks. Also, functional languages like Scala and Julia tend to be a bit faster than Python overall (though a low-level language like C, and often Java too, is still faster than most functional languages). So, the choice before you is quite clear: you can either remain in the OOP world with languages like Python (hedgehog approach) or take a leap of faith into the realm of functional languages like Scala and Julia (foxy approach)…


Zacharias Voulgaris, PhD

Passionate data scientist with a foxy approach to technology, particularly related to A.I.
